ElderSim: A synthetic data generation platform for human action recognition in eldercare applications

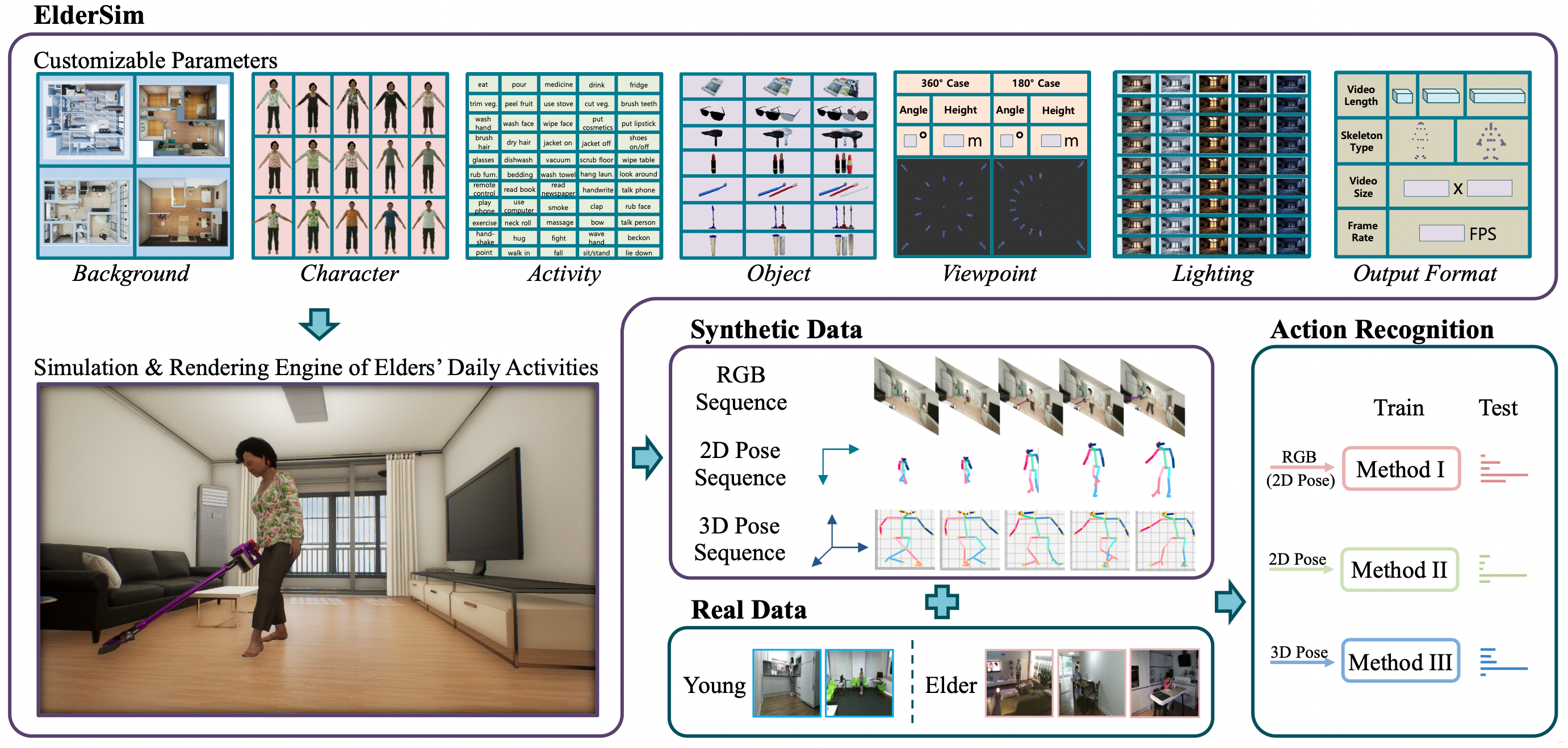

Abstract: To train deep learning models for vision-based action recognition of elders’ daily activities, we need large-scale activity datasets acquired under various daily living environments and conditions. However, most public datasets used in human action recognition either differ from or have limited coverage of elders’ activities in many aspects, making it challenging to recognize elders’ daily activities well by only utilizing existing datasets. Recently, such limitations of available datasets have actively been compensated by generating synthetic data from realistic simulation environments and using those data to train deep learning models. In this paper, based on these ideas we develop ElderSim, an action simulation platform that can generate synthetic data on elders’ daily activities. For 55 kinds of frequent daily activities of the elders, ElderSim generates realistic motions of synthetic characters with various adjustable data-generating options and provides different output modalities including RGB videos, two- and three-dimensional skeleton trajectories. We then generate KIST SynADL, a large-scale synthetic dataset of elders’ activities of daily living, from ElderSim and use the data in addition to real datasets to train three state-of-the-art human action recognition models. From the experiments following several newly proposed scenarios that assume different real and synthetic dataset configurations for training, we observe a noticeable performance improvement by augmenting our synthetic data. We also offer guidance with insights for the effective utilization of synthetic data to help recognize elders’ daily activities.

Synthetic data generation

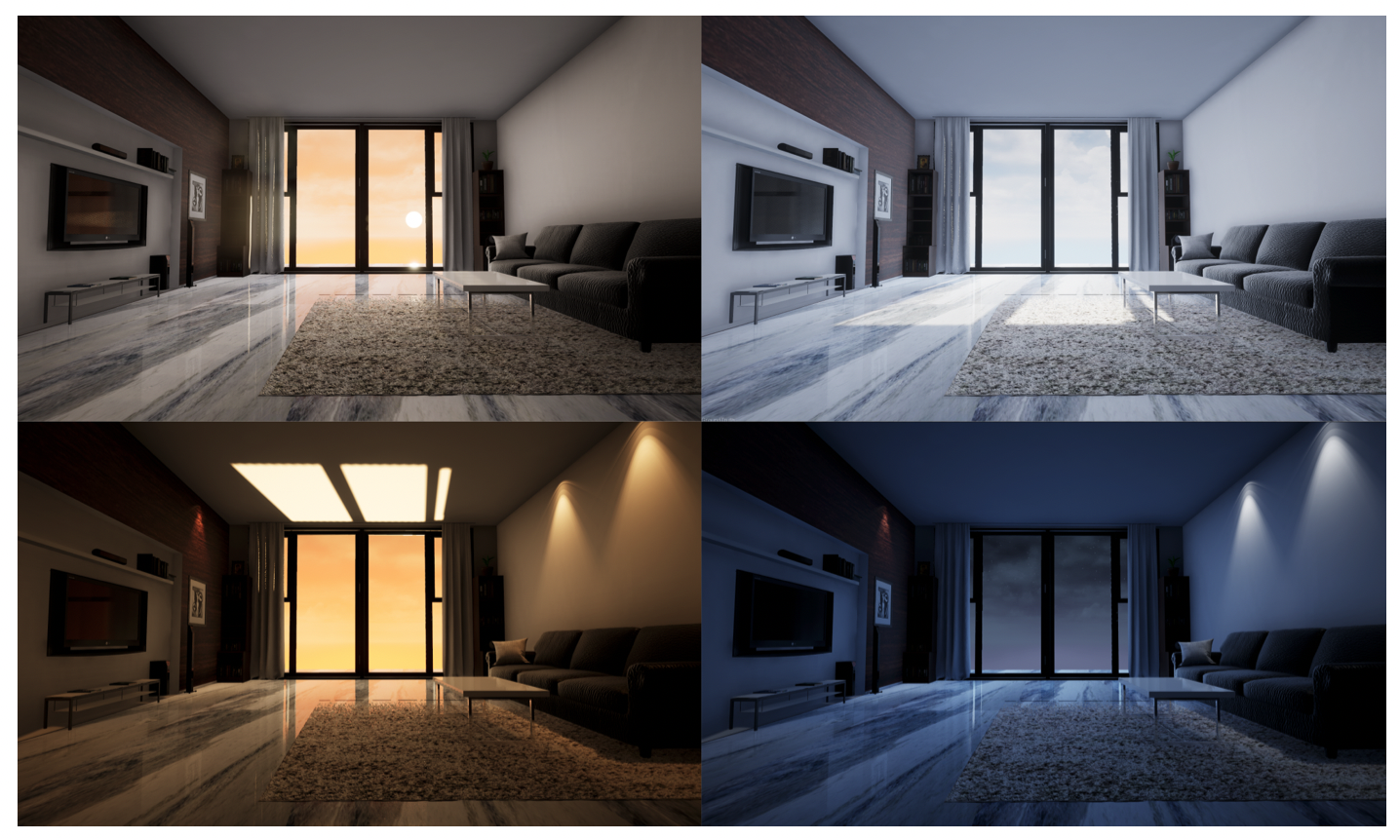

The growth of the world’s elderly population underscores the need for eldercare technologies and the role of action recognition in understanding elders’ activities of daily living (ADL). However, most public datasets used in human action recognition either differ from or have limited coverage of elders’ activities in many aspects. Moreover, acquiring data on elders’ ADL is challenging because of privacy concerns and the physical limitations of the elderly. We introduce ElderSim, an action simulation platform that can generate synthetic data on elders’ daily activities. For 55 kinds of frequent daily activities of the elders, ElderSim generates realistic motions of synthetic characters with several customizable data-generating options and provides several output modalities. We also provide the KIST SynADL dataset, which is generated from our simulation platform.

Human action recognition

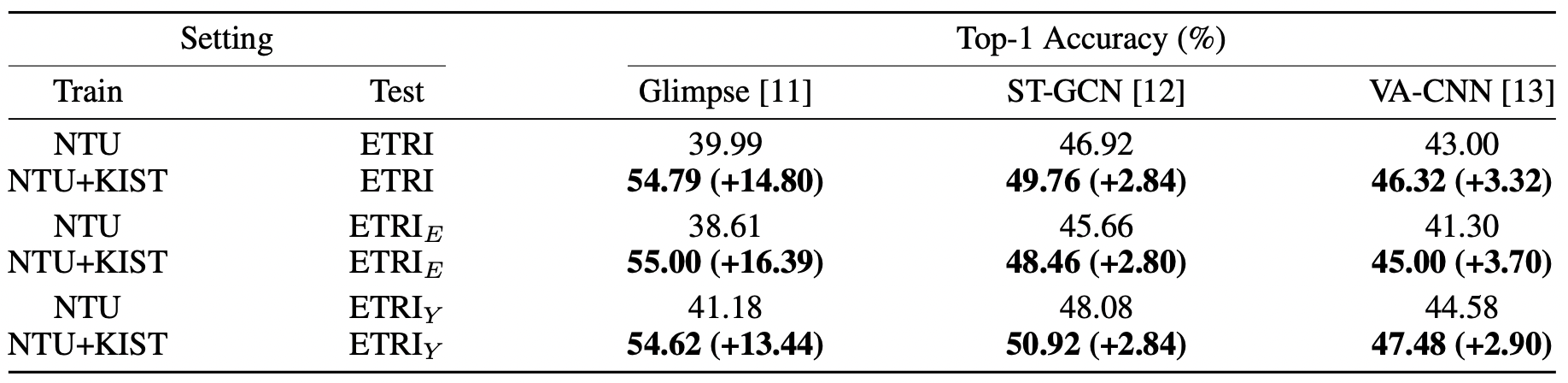

We experimentally validated the effect of augmenting real data with our synthetic data, KIST SynADL (KIST), when training algorithms to recognize elders' ADL. We begin by introducing two real-world datasets for the experiments and show how insufficiently the existing public dataset (NTU RGB+D 120) covers the elders' ADL. We then describe the three state-of-the-art human action recognition (HAR) methods used in the experiments, as well as several experimental scenarios that examine different aspects of recognizing the elders' ADL. Within each experimental scenario, we investigate how our synthetic data can help recognize elders' daily activities and offer guidance and insights for the effective utilization of synthetic data.

We also collected real-world elderly action data to evaluate our synthetic dataset with three different HAR algorithms.

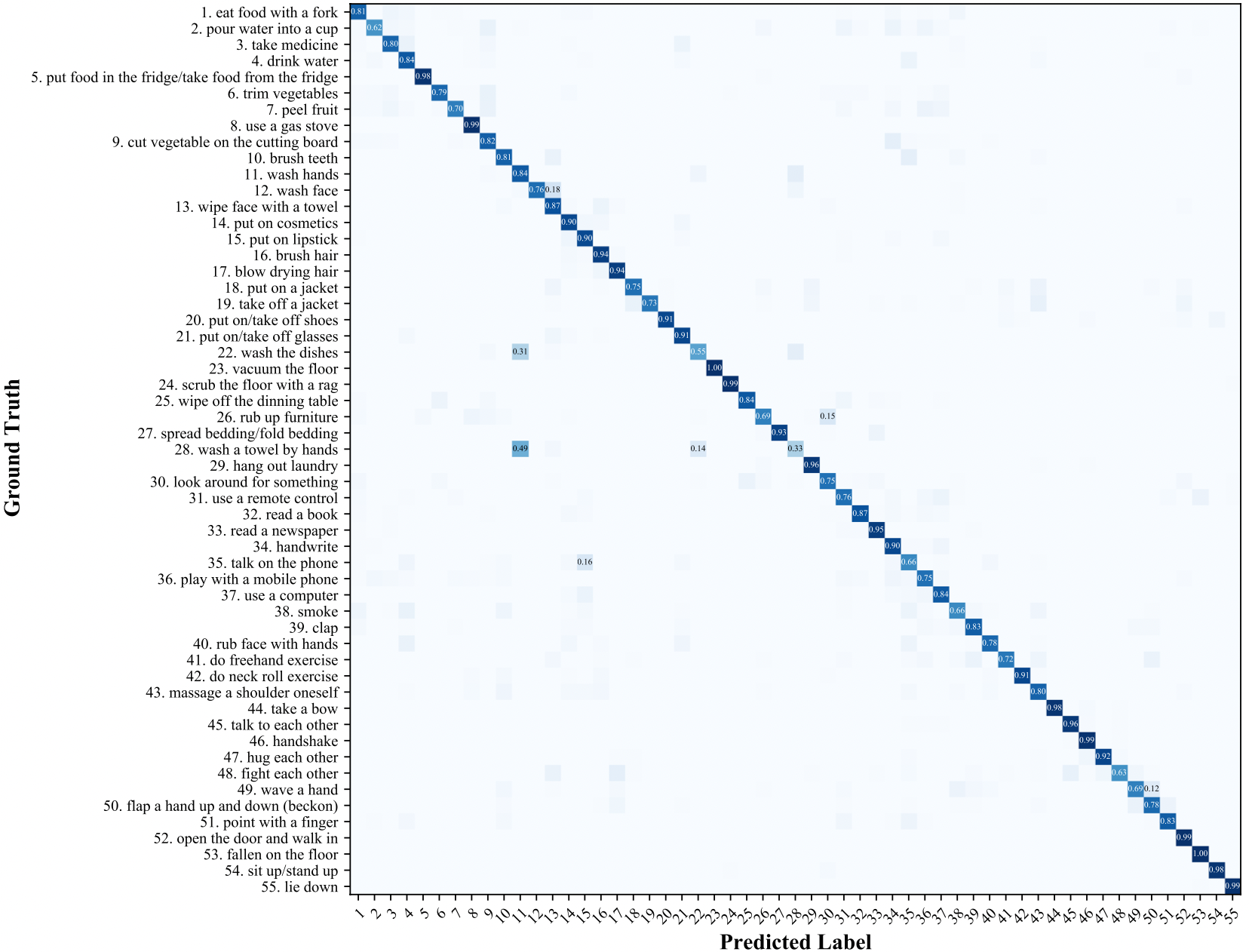

For the experiments that add the KIST SynADL dataset to the training data of ST-GCN and VA-CNN, we balance mini-batches to contain an equal amount of real and synthetic data. Since the sizes of the datasets differ, we randomly upsample the smaller dataset (usually the real-world data) to match the size of the larger one. Twenty viewpoints of the KIST SynADL dataset were used for both methods, while only eight viewpoints were used for the Glimpse method to keep the training time reasonable. We report the results of the experiments performed according to the above settings. In the experiments, we trained the three recognition algorithms on the proposed experimental splits and report the video sequence-level top-1 classification accuracy, averaged over five test trials, as the action recognition score. For the results obtained with the KIST SynADL dataset added, we give the change in the recognition score relative to the score obtained without augmentation in parentheses next to the score. For simplicity, we share only the results of the cross-dataset split and the confusion matrix for the cross-subject split here. You can find more experimental results in our paper.
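The batch balancing and score reporting described above can be illustrated with a minimal Python sketch. It is not the training code used for the experiments: the function names (`upsample_to_match`, `balanced_batches`, `format_score`), the even batch size, the dropping of an incomplete final batch, and the one-decimal score format are all assumptions made for illustration.

```python
import random


def upsample_to_match(samples, target_size, rng):
    """Randomly repeat items of `samples` until it holds `target_size` items."""
    if not samples:
        raise ValueError("cannot upsample an empty dataset")
    extra = [rng.choice(samples) for _ in range(target_size - len(samples))]
    return list(samples) + extra


def balanced_batches(real, synthetic, batch_size, seed=0):
    """Yield mini-batches with equal numbers of real and synthetic samples.

    The smaller dataset is randomly upsampled to the size of the larger one.
    An incomplete final batch is dropped (an assumption of this sketch).
    """
    if batch_size % 2 != 0:
        raise ValueError("batch_size must be even")
    rng = random.Random(seed)
    n = max(len(real), len(synthetic))
    real_up = upsample_to_match(real, n, rng)
    syn_up = upsample_to_match(synthetic, n, rng)
    rng.shuffle(real_up)
    rng.shuffle(syn_up)
    half = batch_size // 2
    for start in range(0, n - half + 1, half):
        yield real_up[start:start + half] + syn_up[start:start + half]


def format_score(trial_accs, baseline_trial_accs=None):
    """Average top-1 accuracy over trials; append the change vs. a baseline."""
    mean = sum(trial_accs) / len(trial_accs)
    if baseline_trial_accs is None:
        return f"{mean:.1f}"
    base = sum(baseline_trial_accs) / len(baseline_trial_accs)
    return f"{mean:.1f} ({mean - base:+.1f})"


if __name__ == "__main__":
    # Hypothetical toy data: 3 real clips and 10 synthetic clips.
    real = [("real", i) for i in range(3)]
    synthetic = [("syn", i) for i in range(10)]
    for batch in balanced_batches(real, synthetic, batch_size=4):
        print(batch)
```

Shuffling the two upsampled lists independently keeps each half of a batch random while guaranteeing the one-to-one ratio of real to synthetic samples. The `format_score` helper mirrors the reporting convention of showing the change from the non-augmented score in parentheses.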